Dynamic log management

In nullplatform, you can use an advanced log system that lets you control your logs without modifying your applications. You define policies that set the default logging behavior. In an emergency, you can then override those policies to get more visibility for a limited period of time.

How to use it?

The first step in using the log system is to create a default log policy with the Log Configuration API. Policy selectors use NRN patterns, and they apply to every application running on nullplatform. This means you can create a policy for an account, a namespace, or even a single scope. You can define multiple policies and combine them. For example, you can set a default behavior for a nullplatform account while a specific application behaves differently.
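
To make selector matching more concrete, here is a minimal Python sketch of how one scope could be resolved against several policies. It rests on hypothetical rules, not documented ones. A selector matches a scope when its NRN segments are a prefix of the scope's NRN. Active overrides (is_default false) win over defaults. Within each group, the most specific selector wins. LogPolicy, nrn_matches, and resolve_policy are illustrative names, not part of the nullplatform API.

```python
from dataclasses import dataclass
from datetime import datetime
from typing import List, Optional


@dataclass
class LogPolicy:
    # Hypothetical in-memory model of a policy; field names follow the JSON bodies on this page.
    nrn: str
    configuration: dict
    is_default: bool = True
    expires_at: Optional[datetime] = None


def nrn_matches(selector_nrn: str, scope_nrn: str) -> bool:
    # Assumption: a selector matches when its key=value segments are a prefix of the scope NRN.
    selector = selector_nrn.split(":")
    scope = scope_nrn.split(":")
    return scope[: len(selector)] == selector


def resolve_policy(policies: List[LogPolicy], scope_nrn: str, now: datetime) -> Optional[LogPolicy]:
    # Keep policies that cover the scope and have not expired yet.
    candidates = [
        p
        for p in policies
        if nrn_matches(p.nrn, scope_nrn) and (p.expires_at is None or p.expires_at > now)
    ]
    if not candidates:
        return None
    # Assumed precedence: overrides beat defaults, then the most specific selector wins.
    return max(candidates, key=lambda p: (not p.is_default, len(p.nrn.split(":"))))
```

Suppose an account-level policy and a namespace=36 policy both exist. Under these assumptions, a scope inside namespace 36 resolves to the namespace policy until an unexpired override targets the scope itself.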

In a policy's configuration, you can set the following values:

- log_level: one of FATAL, ERROR, WARN, INFO, DEBUG, TRACE

- error_sampling: 0-100, the percentage of error logs that pass through (100 lets all error logs through)

- no_error_sampling: 0-100, the percentage of non-error logs that pass through (100 lets all non-error logs through)

- max_errors_per_minute: the maximum number of error logs per minute, applied after sampling

- max_no_errors_per_minute: the maximum number of non-error logs per minute, applied after sampling
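
You can check the ranges in this list on the client side before you submit a policy. The following Python sketch is a hypothetical validator (validate_configuration is not a nullplatform function). It encodes only the ranges listed above. It assumes that level names are case-insensitive and that per-minute limits are non-negative integers. The API may apply its own, different rules.

```python
from typing import List

LOG_LEVELS = ("FATAL", "ERROR", "WARN", "INFO", "DEBUG", "TRACE")


def validate_configuration(config: dict) -> List[str]:
    """Return the problems found in a policy configuration (empty if it looks valid)."""
    problems = []
    level = config.get("log_level")
    # Assumption: levels are case-insensitive, since the example on this page uses "error".
    if level is not None and str(level).upper() not in LOG_LEVELS:
        problems.append("log_level must be one of " + ", ".join(LOG_LEVELS))
    for key in ("error_sampling", "no_error_sampling"):
        value = config.get(key)
        # Sampling values are percentages: 0 drops everything, 100 lets everything through.
        if value is not None and not (isinstance(value, (int, float)) and 0 <= value <= 100):
            problems.append(f"{key} must be a number between 0 and 100")
    for key in ("max_errors_per_minute", "max_no_errors_per_minute"):
        value = config.get(key)
        # Assumption: per-minute limits are non-negative whole numbers.
        if value is not None and not (isinstance(value, int) and value >= 0):
            problems.append(f"{key} must be a non-negative integer")
    return problems
```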

For example, a default configuration might look like this:

{
  "is_default": true,
  "selector": {
    "nrn": "organization=4:account=17:namespace=36"
  },
  "configuration": {
    "log_level": "error"
  }
}

Once this policy is in place, all scopes within namespace 36 will log only errors by default.
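
The effect of this policy can be sketched in Python under the usual threshold semantics for log levels, in which a record is kept when its severity is at or above the configured log_level. Under that assumption, FATAL records also pass a log_level of error. passes_level is a hypothetical helper, not part of nullplatform.

```python
# Ordered from least to most severe; assumed to mirror the log_level values listed above.
SEVERITY = {"TRACE": 0, "DEBUG": 1, "INFO": 2, "WARN": 3, "ERROR": 4, "FATAL": 5}


def passes_level(record_level: str, configured_level: str) -> bool:
    """Keep a record when its severity is at or above the configured log_level."""
    return SEVERITY[record_level.upper()] >= SEVERITY[configured_level.upper()]


records = [("INFO", "user logged in"), ("ERROR", "payment failed"), ("FATAL", "out of memory")]
kept = [message for level, message in records if passes_level(level, "error")]
print(kept)  # ['payment failed', 'out of memory']
```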

How to override the policy

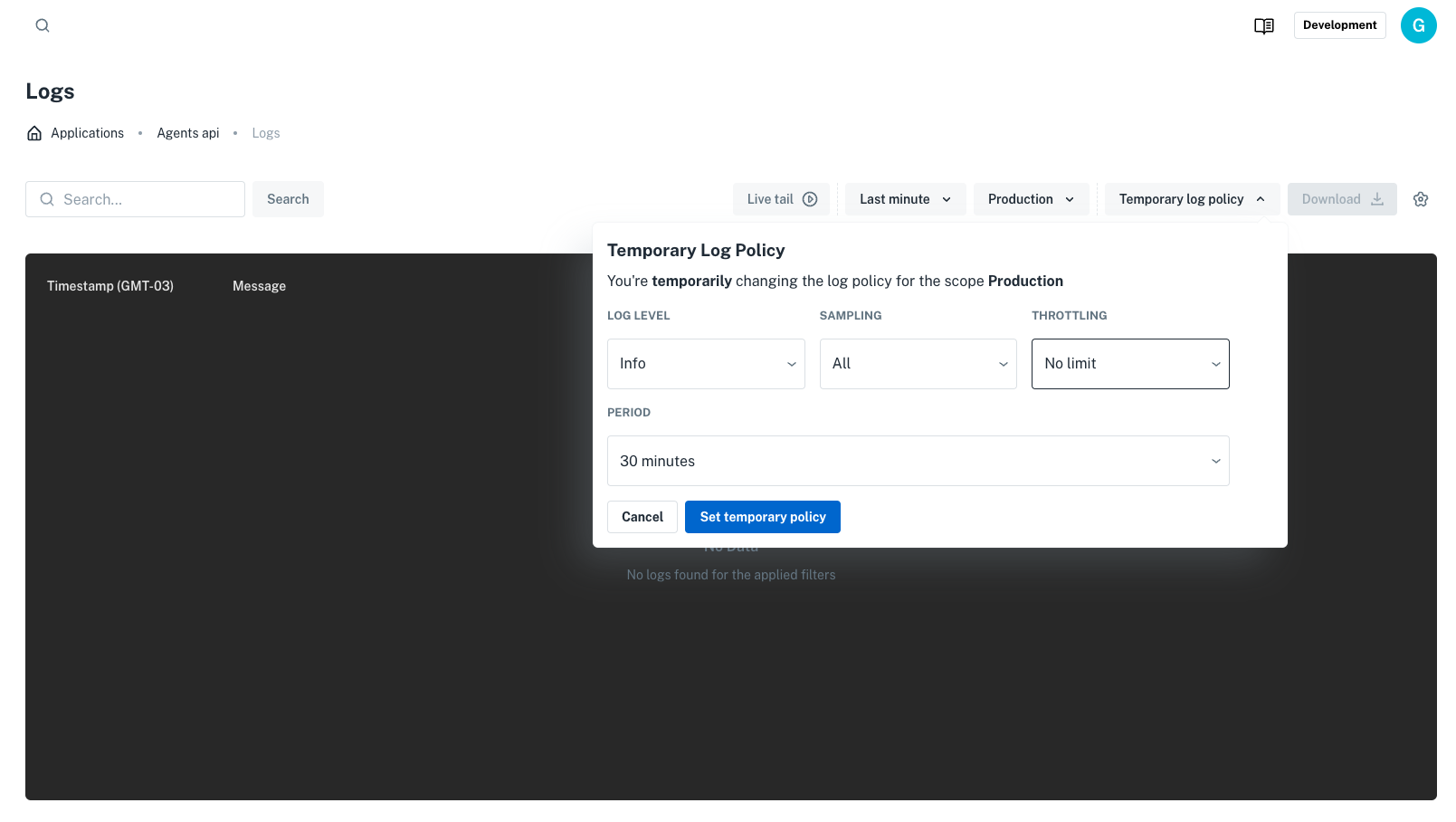

Once the default policy is defined, you can perform a temporary override directly from the logs screen, as shown in the image:

You can also create an override through the API. In that case, set the expiration date of the override policy in expires_at:

{
  "is_default": false,
  "selector": {
    "nrn": "organization=4:account=17:namespace=36:application=58:scope=9984"
  },
  "configuration": {
    "log_level": "info"
  },
  "expires_at": "2021-12-31T23:59:59Z"
}
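
When you create an override programmatically, you usually compute the expiration relative to the current time. This Python sketch builds a request body that matches the JSON above, for a two-hour override. build_override is a hypothetical helper. The sketch does not send the body to the Log Configuration API, because this page does not cover the endpoint details.

```python
import json
from datetime import datetime, timedelta, timezone
from typing import Optional


def build_override(scope_nrn: str, log_level: str, duration: timedelta, now: Optional[datetime] = None) -> dict:
    """Build an override policy body that expires `duration` after `now` (a timezone-aware datetime)."""
    now = now or datetime.now(timezone.utc)
    expires_at = (now + duration).astimezone(timezone.utc)
    return {
        "is_default": False,
        "selector": {"nrn": scope_nrn},
        "configuration": {"log_level": log_level},
        # Same ISO 8601 UTC format as the example above, e.g. 2021-12-31T23:59:59Z.
        "expires_at": expires_at.strftime("%Y-%m-%dT%H:%M:%SZ"),
    }


body = build_override(
    "organization=4:account=17:namespace=36:application=58:scope=9984",
    "info",
    timedelta(hours=2),
    now=datetime(2021, 12, 31, 21, 59, 59, tzinfo=timezone.utc),
)
print(json.dumps(body, indent=2))
```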

How does it work under the hood?

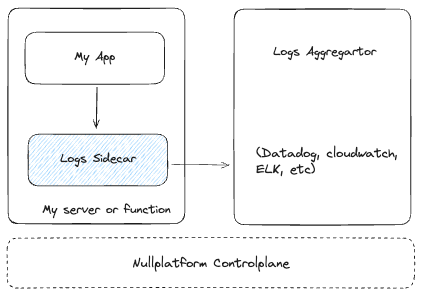

The log architecture looks like this:

In this setup, applications send their logs to a local sidecar, whether they run on instances, Kubernetes, or Lambda. The sidecar filters the logs before forwarding them to the aggregator (CloudWatch, Datadog, etc.). It applies the log policies defined in nullplatform, which acts as the control plane in the diagram. To do this, it analyzes the logs to determine each one's level and then applies sampling and throttling.
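
Here is a hypothetical Python heuristic that illustrates the first step, interpreting the level. It reads a level field from JSON-structured lines and falls back to searching plain-text lines for a level keyword. This page does not describe the sidecar's actual parsing rules, so detect_level and its rules are assumptions.

```python
import json
import re
from typing import Optional

LEVELS = ("FATAL", "ERROR", "WARN", "INFO", "DEBUG", "TRACE")
# Matches a level keyword as a whole word; WARNING is normalized to WARN below.
LEVEL_PATTERN = re.compile(r"\b(FATAL|ERROR|WARN(?:ING)?|INFO|DEBUG|TRACE)\b", re.IGNORECASE)


def normalize(level: str) -> Optional[str]:
    level = level.upper()
    if level == "WARNING":
        return "WARN"
    return level if level in LEVELS else None


def detect_level(line: str) -> Optional[str]:
    """Best-effort guess of a log line's level; returns None when no level is found."""
    # Structured logs: use the "level" field of a JSON object if present.
    try:
        record = json.loads(line)
    except ValueError:
        record = None
    if isinstance(record, dict) and isinstance(record.get("level"), str):
        level = normalize(record["level"])
        if level is not None:
            return level
    # Plain-text logs: use the first level keyword found in the line.
    match = LEVEL_PATTERN.search(line)
    return normalize(match.group(1)) if match else None
```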

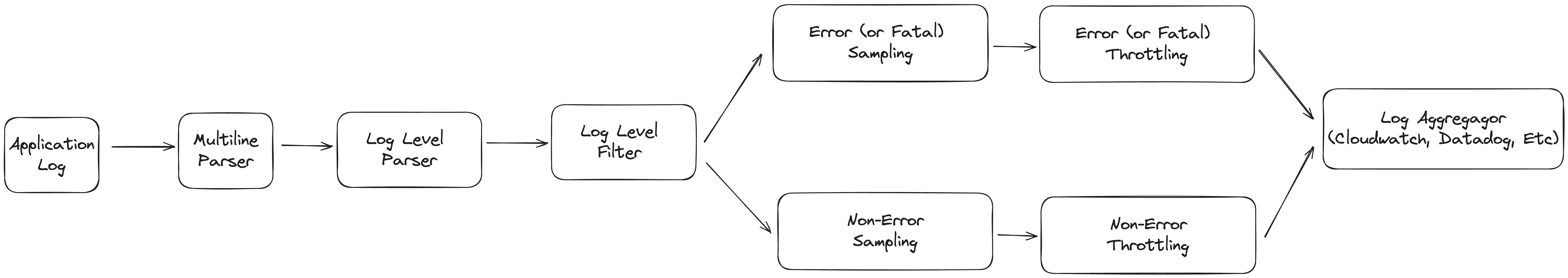

By default, the log pipeline executed in the sidecar (and therefore before the logs leave the instance, container, or function) is as follows:
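
The following Python sketch approximates the pipeline by chaining three steps: a log_level filter, sampling, and per-minute throttling applied after sampling. Sampling uses error_sampling and no_error_sampling as percentages. Throttling uses max_errors_per_minute and max_no_errors_per_minute. The sketch assumes that ERROR and FATAL count as errors and that throttling uses fixed one-minute windows. LogPipeline is a hypothetical class, not the sidecar's code.

```python
import random
from dataclasses import dataclass, field
from typing import Dict, Optional, Tuple

# Ordered from least to most severe; ERROR and FATAL are treated as errors (assumption).
SEVERITY = {"TRACE": 0, "DEBUG": 1, "INFO": 2, "WARN": 3, "ERROR": 4, "FATAL": 5}


@dataclass
class LogPipeline:
    """Hypothetical sketch: level filter, then sampling, then per-minute throttling."""

    log_level: str = "INFO"
    error_sampling: float = 100.0
    no_error_sampling: float = 100.0
    max_errors_per_minute: Optional[int] = None
    max_no_errors_per_minute: Optional[int] = None
    rng: random.Random = field(default_factory=random.Random)
    # Accepted-record counters keyed by (minute window, is_error).
    _counts: Dict[Tuple[int, bool], int] = field(default_factory=dict, init=False, repr=False)

    def accept(self, level: str, timestamp: float) -> bool:
        """Return True if a record with this level, at `timestamp` seconds, should be forwarded."""
        severity = SEVERITY[level.upper()]
        # 1. Level filter: drop records below the configured log_level.
        if severity < SEVERITY[self.log_level.upper()]:
            return False
        is_error = severity >= SEVERITY["ERROR"]
        # 2. Sampling: keep roughly `sampling` percent of the records of this kind.
        sampling = self.error_sampling if is_error else self.no_error_sampling
        if self.rng.random() * 100 >= sampling:
            return False
        # 3. Throttling: cap the records that survived sampling per one-minute window.
        limit = self.max_errors_per_minute if is_error else self.max_no_errors_per_minute
        if limit is not None:
            window = int(timestamp // 60)
            # Forget counters from earlier windows so memory stays bounded.
            for key in [k for k in self._counts if k[0] < window]:
                del self._counts[key]
            count = self._counts.get((window, is_error), 0)
            if count >= limit:
                return False
            self._counts[(window, is_error)] = count + 1
        return True
```

For example, LogPipeline(log_level="error", max_errors_per_minute=2) forwards the first two ERROR or FATAL records in a given minute and drops INFO records entirely. Injecting rng makes the sampling step reproducible in tests.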

Advanced usage

Internally, the sidecar dynamically configures different log processors (depending on the environment). This means the pipelines can be modified on the fly to perform additional functions, such as filtering logs that match a certain expression or adding logs. To do this, modify the configuration templates through the Templates API. Because these changes can have serious consequences, we suggest contacting your nullplatform support representative before making any modifications.
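
This page does not document the Templates API format. The following Python sketch therefore only illustrates the idea of an extra pipeline stage: a processor that drops lines matching a regular expression, chained with any other processors. drop_matching and run_pipeline are hypothetical names. They do not represent how the sidecar's templates are actually written.

```python
import re
from typing import Callable, Iterable, List, Optional

# A processor receives a log line and returns it (possibly modified) or None to drop it.
Processor = Callable[[str], Optional[str]]


def drop_matching(pattern: str) -> Processor:
    """Build a processor that drops any line matching the regular expression."""
    compiled = re.compile(pattern)

    def processor(line: str) -> Optional[str]:
        return None if compiled.search(line) else line

    return processor


def run_pipeline(lines: Iterable[str], processors: List[Processor]) -> List[str]:
    """Pass each line through the processors in order, stopping early if one drops it."""
    output = []
    for line in lines:
        current: Optional[str] = line
        for process in processors:
            current = process(current)
            if current is None:
                break
        if current is not None:
            output.append(current)
    return output


print(run_pipeline(["GET /health 200", "GET /orders 500"], [drop_matching(r"/health")]))
# ['GET /orders 500']
```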

Extras

We published a blog post exploring different approaches to logging and the impact of adopting Dynamic Logs Management (link to blog).